Some of the country’s most powerful men are panicking about testosterone levels. Tucker Carlson’s 2022 documentary The End of Men blamed declining testosterone for the supposedly rampant emasculation of American men. Influencers issue warnings that “low T is ruining young men.” Recently, the Department of Health and Human Services has gone all in on the hormone, publishing new dietary guidelines meant to help men maintain healthy testosterone levels and considering widening access to testosterone-replacement therapy. Kush Desai, a White House spokesperson, told me in an email that “a generational decline in testosterone levels among American men is one of many examples of America’s worsening health.”

Health and Human Services Secretary Robert F. Kennedy Jr. has gone further, calling low T counts in teens an “existential issue.” The secretary, who at 72 has the physique of a Marvel character, has touted testosterone as part of his personal anti-aging protocol. He also recently proclaimed that President Trump has the “constitution of a deity” and raging levels of T.

American men are responding by attempting to increase their testosterone levels—whether they need to or not. Low-testosterone clinics, many of them based online, have proliferated, promising men a way to “get your spark back” or “reclaim your life.” So have supplements and accessories designed to support testosterone health—you can buy an ice pack designed specifically to keep testicles cool in a sauna. Recently, a friend who questioned his dedication to testicular health after seeing ads for testosterone boosters asked me if he should buy nontoxic briefs from a company called (yes, seriously) Nads.

Research has shown that average testosterone levels are indeed declining in American men. The primary study on the slump, published in 2007, followed Boston-based men over two decades and found that their levels had declined significantly more than would be expected from aging alone. Since then, numerous studies have documented the same trend among various age groups. The dip can probably be attributed to a number of factors, including rising obesity rates, widespread chronic disease, and sedentary lifestyles, Scott Selinger, an assistant professor at the University of Texas at Austin’s Dell Medical School who studies testosterone, told me. Hot testicular temperatures—because of tight-fitting clothes, excessive sitting, or, more dramatically, a quickly warming climate—may also affect testosterone production, Selinger said.

Low testosterone really can be debilitating. Deficiency is linked to low libido, erectile issues, fatigue, heart disease, osteoporosis, anemia, and depression. But the prevalence of testosterone deficiency is hard to define. Estimates range from 2 to 50 percent across studies and differ greatly by age. Speaking about men’s-health experts, Abraham Morgentaler, a urologist specializing in testosterone therapy at Harvard Medical School, told me, “I don’t think too many people are really concerned” about population-level declines. Major professional groups whose members study and treat testosterone deficiency—the Androgen Society, the Endocrine Society, the American Urological Association—haven’t launched any specific initiatives to combat low testosterone, Morgentaler said.

They are nonchalant in part because the average decline in testosterone is not especially large. The “normal” range for testosterone isn’t well defined; limits set by different medical societies span from about 300 to 1,000 nanograms per deciliter. The Boston paper found that the average participant’s testosterone levels dropped roughly 50 nanograms per deciliter every eight years or so, “which for some people can make a difference, but for a lot of people, it doesn’t,” Selinger said. Experts are more concerned about what might be driving the decline: “I wouldn’t say there is an epidemic of low testosterone,” Franck Mauvais-Jarvis, an endocrinology professor at Tulane University School of Medicine, told me. “The problem is the epidemic of chronic disease.”

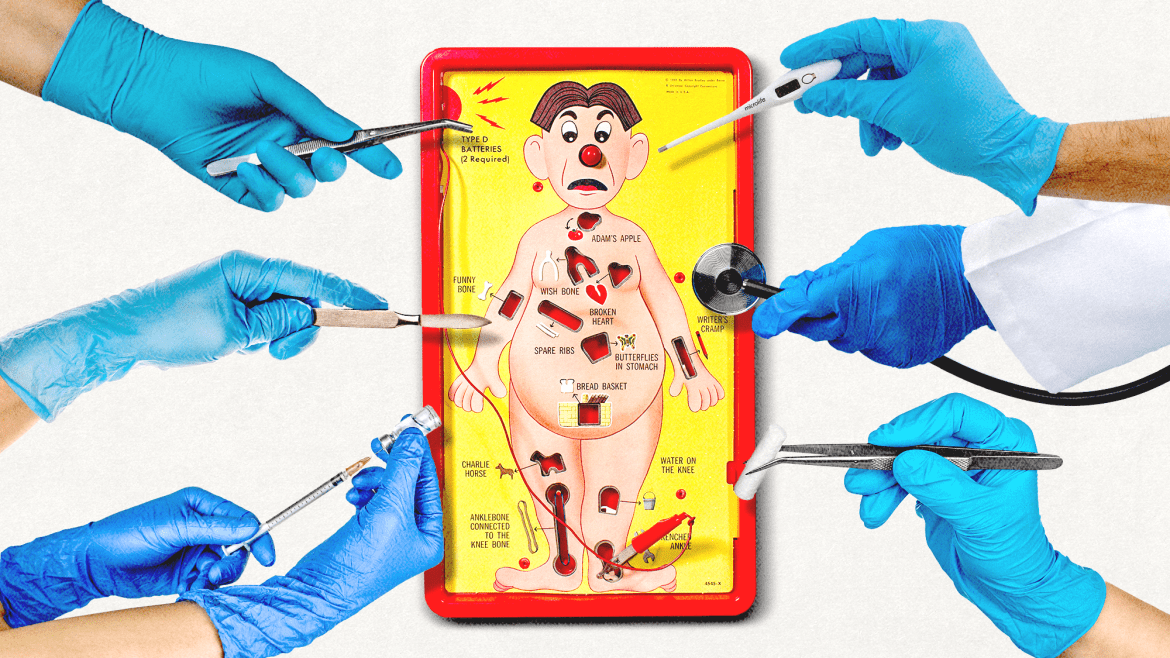

Meanwhile, the number of American men receiving testosterone-replacement therapy grew by almost 30 percent from 2018 to 2022. In TRT, lab-synthesized testosterone is administered to patients in a variety of formats, including injections, pills, topical gels, and slow-dissolving pellets inserted under the skin of their buttocks or hips. Testosterone, which is a controlled substance, is approved only for the treatment of testosterone deficiency; a diagnosis requires blood work that shows levels below the normal range over multiple days and, crucially, corresponding symptoms. But it’s also easy to get for other reasons. Doctors can prescribe it off-label to men with normal T levels who complain of low energy, decreased libido, or erectile dysfunction. Some gym fanatics buy it, sometimes through illicit channels, to build muscle, which is the only undeniable effect of raising T in someone with normal levels, Morgentaler said. All of the experts I spoke with were dubious that low-T clinics follow standard medical practice for prescribing testosterone, including discussing potential risks with patients and employing hormone specialists. (I reached out to two large testosterone clinics, but they did not respond.)

Whether the goal of these clinics is to treat low T or jack levels up to the max isn’t clear. Many encourage men to aim for excessive T, Michael Irwig, an endocrinologist at Harvard Medical School, told me. A 2022 study of seven direct-to-consumer low-testosterone clinics found that three of them proposed a treatment goal of at least 1,000 nanograms per deciliter—one advertised a goal of 1,500. It should come as no surprise, then, that up to a third of men on TRT don’t have a deficiency, and that the majority of new testosterone users start treatment without completing the blood work needed for a diagnosis.

The maximalist approach to testosterone is risky. Although new research dismisses previous concerns about TRT causing cardiovascular disease and prostate cancer, too much of the hormone can elevate levels of hemoglobin, which raises the risk of blood clots, and estradiol, which can cause breast enlargement. It also causes testicular shrinkage and infertility. Although increasing testosterone can improve sexual performance, it has the opposite effect on reproduction, which is why the American Urological Association advises men to be cautious about TRT if they want to have kids. If a man begins testosterone therapy in his 20s and stays on it for upwards of five years, the chances that he’ll ever recover his original sperm levels are low, John Mulhall, a urologist at Memorial Sloan Kettering Cancer Center, told me.

Plus, pumping the body full of testosterone may not alleviate the problems that patients set out to solve. Every patient responds to testosterone differently, Mauvais-Jarvis said; some men may feel perfectly fine at levels considered deficient, while others require far higher levels. The symptoms of deficiency could also be caused by any number of common ailments, such as obesity, cardiovascular disease, and depression. It’s not uncommon for a patient who still feels unwell after starting treatment to ask for more testosterone, Mulhall said. But if his problems have a different root cause, “pushing that man to a T level of 1,000 won’t improve his symptoms.”

The current spotlight on testosterone could lead to better care for men in at least one way: If the FDA removes restrictions on the hormone, more patients will be able to get treatment from their doctors—many of whom are reluctant to prescribe controlled substances—instead of turning to dubious third-party clinics, Mauvais-Jarvis said. This would be especially beneficial for the men who do have a genuine T deficiency but are currently not receiving treatment.

The panic over testosterone seems unlikely to end anytime soon, in part because it is about not just men’s health, but also manhood. The Trump administration is obsessive about manliness. Trump himself—who shared his T level (a perfectly respectable 441 nanograms per deciliter) during his first presidential campaign—has been lauded by his fans as a paragon of masculinity. The message is clear: When it comes to testosterone, more is definitely more.